Problem Statement

I have more than 100 SQL tables that needs to have a "checksum" field something like HashByte MD5, the major problem is that the number of fields keep on changing on the fly and I need to fix my checksum field each time, I need a tool to handle the issues and I need to have a mechanism that can identify or apply the new HashByte changes.

Basic knowledge of HashByte

Hashbytes is a mathematical algorithm that comes in different variety that are known as… MD2, MD4, MD5, SHA, SHA1, SHA2_256, SHA2_512.

Click

here for more info.

Where can HashByte be used?

Hashbyte is mainly used in 2 areas

1 - Encryption : When encrypting credit card information, the developer now can save the encrypted information in a SQL table.

2 - Checksum : When having 100s of fields in a table that is a

SCD table, the 100s of fields can be replaced with one Hashbyte field.

http://sqlbanana.blogspot.ca/2014/07/checksum-hashbytes-and-slowly-changing.html

https://en.wikipedia.org/wiki/Slowly_changing_dimension

Fields in a Table

First you must know your fields very well you must identity ....

1 - External fields (Data related): fields imported from the outside world into your database/table

- [Natural Key(s)]

- Other data related fields

2 - Internal fields (

Not Data related): This is the list of the fields that have been defined for your table(s)

- [Surrogate Key] (Numeric)

- Checksum field "[iHashByteChecksum] [binary](16) NOT NULL"

- [FromDate], [ToDate] (SCD related)

- [BatchLogID], [RowNumber]

- [CreateDate], [UpdatedDate] , [UserName]

HashByte Creator Tool

I am providing few SQL scripts that you will have to read and run in sequence of the file number

- "010 CreateDB.sql"

- "020 CreateSchema.sql"

- "030 frmwrkDestinationTable.sql"

- "040 HashByteConfigTable.sql"

- "050 ufnCreateHashByteString.sql"

- "060 Test.sql"

- The file "030 frmwrkDestinationTable.sql" will play a role of a dimension table or any table that you need to have a Hashbyte field on it

- The file "040 HashByteConfigTable.sql" is part of the "HashByte Creator Tool" what it does it creates a configuration table, you must configure and set this table according to your sql tables that needs to be HashByted (in the BI world mainly the dimension).

Scenario: If a new field is added in the dimension table what happens?

Answer: The function picks all the fields except, Natural Keys, Internal fields, and Excluded fields, in that case the new added fields will get picked up in the next run, check the WHERE clause of the SQL statement.

FROM INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_SCHEMA = @SchemaName

AND TABLE_NAME = @TableName

AND COLUMN_NAME NOT LIKE '%checksum%'

AND COLUMN_NAME NOT LIKE '%HashByte%'

AND COLUMN_NAME NOT LIKE 'i%'

AND CHARINDEX (COLUMN_NAME , @SurrogateKeys) < 1

AND CHARINDEX (COLUMN_NAME , @NaturalKeys) < 1

AND CHARINDEX (COLUMN_NAME , @ExcludeFields) < 1

- The "050 ufnCreateHashByteString.sql" file creates a string that can be used in a Hashbyte function that looks like

ISNULL(RTRIM(CONVERT(varchar(11),[DataField2])),'')

+ ISNULL(RTRIM(CONVERT(varchar(23),[DataField3])),'')

+ ISNULL(RTRIM([DataField4]),'')

- The "060 Test.sql" does the test and wraps the above string into a Hashbyte string like..

UPDATE [fw].[frmwrkDestinationTable]

SET [iHashByteChecksum] = convert(varbinary(16) , HASHBYTES('MD5',

ISNULL(RTRIM(CONVERT(varchar(11),[DataField2])),'')

+ ISNULL(RTRIM(CONVERT(varchar(23),[DataField3])),'')

+ ISNULL(RTRIM([DataField4]),'')

))

Mandatory fields required

All of your SQL tables in the HashByteConfigTable must have the fields as mentioned

- One Natural Key field (external data)

- Data field (external data)

- [iHashByteChecksum] [binary](16) NOT NULL (designed by the developer)

- [iSurrogateKey] [Int] NOT NULL (designed by the developer)

Other mandatory

Depending of the type of the SCD the fields must be added by the developer in this case I picked type2.

- [iStartDateKey] --- SCD Type2.2

- [iEndDateKey] --- SCD Type2.2

[iVersion] --- SCD Type2.1

[iCurrentFlag] --- SCD Type2.3

Please note that the SCD is not covered in this blog

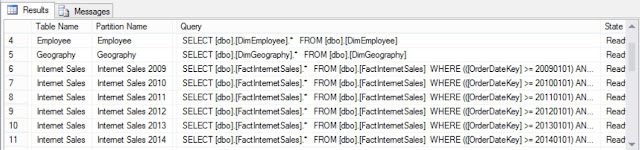

The Result

The results can be seen in the in he next screenshot.

Now with the help of the "HashByte Creator Tool" and the HashByteConfigTable you can configure as much as SQL tables that you want to be HashByted.

all you need to do now is to ...

- looping through the HashByteConfigTable record by record

- Call the function ufnCreateHashByteString on each loop

- Wrap the result in a HashByte format

- Execute the string

Click here to download the code.

References:

https://www.mssqltips.com/sqlservertip/2543/using-hashbytes-to-track-and-store-historical-changes-for-sql-server-data/

https://www.mssqltips.com/sqlservertip/2543/using-hashbytes-to-track-and-store-historical-changes-for-sql-server-data/

http://www.sqlservercentral.com/articles/Data+Warehousing/69679/

https://blogs.technet.microsoft.com/srd/2013/11/12/security-advisory-2880823-recommendation-to-discontinue-use-of-sha-1/

http://www.mathstat.dal.ca/~selinger/md5collision/

http://www.links.org/?p=6