1.“Excel File” (Source)

2.“OLEDB” (Destination)

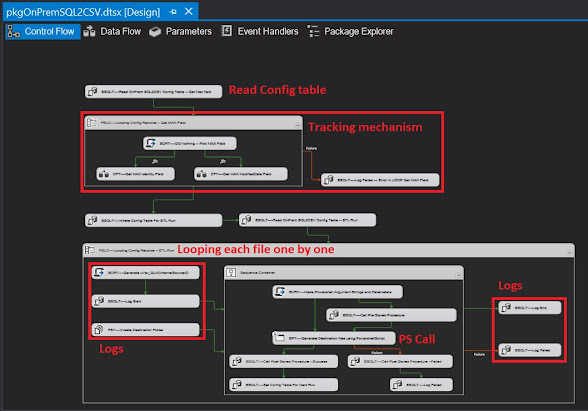

Package Flow

The package has 2 main section, one is to

create backup folder, clear Expressions, and some other variable settings, the

second one is to loop through each excel (97-2003) Files one by one and loop

through each Sheet one by one, do the ETL and finally move the file to the

backup folder, I will not explain the above two section except how I set the second “For Each Loop” in SSIS. I have added a T-SQL script to create the destination

table (look for DestinationClientTable.sql file)

After the first

loop you will see a .Net code object script “SCRIPT-----Get The List of Excel Sheets” that will extract the list

of excel sheets and place them into the variable “uVar_ExcelSheetObjectList”

which is a SSIS object variable.

The .Net code that loops through the sheets in each excel files goes like this.

Public Sub Main()

Try

'--------------------------------------------------------

'

User::uVar_ExcelConnectionString

'

User::uVar_ExcelSheetObjectList

'--------------------------------------------------------

'---

Added by SQL Data Side Inc. Nik-Shahriar

Nikkhah

' Don't

forget to add >>>>>

Imports System.Data.OleDb

Dim excelConnection As OleDbConnection

Dim connectionString As String

Dim tablesInFile As DataTable

Dim tableCount As Integer = 0

Dim tableInFile As DataRow

Dim currentTable As String

Dim tableIndex As Integer = 0

Dim excelTables As String()

Dim LoopForNumnberOfRealTabs As Integer = 0

connectionString = Dts.Variables("User::uVar_ExcelConnectionString").Value.ToString

excelConnection = New OleDbConnection(connectionString)

excelConnection.Open()

tablesInFile =

excelConnection.GetSchema("Tables")

tableCount =

tablesInFile.Rows.Count

For Each tableInFile In tablesInFile.Rows

currentTable =

tableInFile.Item("TABLE_NAME").ToString

'str =

tableInFile.Item("TABLE_Type").ToString

'str

= tableInFile.Item("TABLE_SCHEMA").ToString

'str

= tableInFile.Item("TABLE_CATALOG").ToString

currentTable =

currentTable.Replace("'", "")

If Right(currentTable, 1) = "$" Then

LoopForNumnberOfRealTabs +=

1

ReDim Preserve

excelTables(LoopForNumnberOfRealTabs - 1)

excelTables(LoopForNumnberOfRealTabs - 1) = currentTable

End If

Next

excelConnection.Close()

excelConnection = Nothing

Dts.Variables("User::uVar_ExcelSheetObjectList").Value = excelTables

Dts.TaskResult = ScriptResults.Success

Catch ex As Exception

Dim strEX As String

strEX = ex.Message.ToString

Dts.TaskResult = ScriptResults.Failure

End Try

End Sub

------------------------------

Then comes the second loop that uses the “uVar_ExcelSheetObjectList”

package variable (populated in the “SCRIPT-----Get The List of Excel Sheets” step)

which contains the list of the excel sheets and loops through them one by one, on

each loop it places the excel sheet name into the “uVar_ExcelSheetName”

variable that will be used in the source object in the DFT.

With 2 steps you

can set up the second loop.

1.Collection

2.Variable Mapping

Please check the DFT settings

How To Test?

- Set your SQL server name and SQL DB Name in

the project parameters.

- Create the destination table (You can use

DestinationClientTable.sql).

- Create a folder in my case it is

“C:\P_C\2018\SQL2016\SSIS\Exl2SQL-Exl97-2003”.

- Copy the above Path in the

“uPara_MainFolderPath” variable.

- Create 2 Sub folders 1 – SampleFile 2-

ToBeProcessed.

- You must have your SampleFile97-2003.xls (97-2003

format) file copied in the SampleFile folder (I have provided two files, one of

then has multiple sheets “SampleFile97-2003 - MultipleSheet.xls”).

- Copy all of your source files into the

ToBeProcessed folder or you can use the SampleFile97-2003.xls to get the ball

rolling (or use “SampleFile97-2003 - MultipleSheet.xls”).

- Run your SSIS package

- Using windows explorer go to the same path

as defined in “uPara_MainFolderPath” variable, you will see a sub folder named

“BackupFolder”.

- Now your files have moved from

ToBeProcessed folder to the BackupFolder folder under todays

“…\BackupFolder\yyyy-mm\yyyy-mm-dd-HHmmss\”

- Also please check your destination table,

my sample uses the [dbo].[Client] table.

Please note if…

- If your ToBeProcessed folder (Source Folder)

is located on another folder/machine you can enter the valid path in the

“uPara_ToBeProcessedFolder” variable.

- If your Backup folder is located on another

folder/machine you can enter the valid path in the “uPara_BackupMainFolderPath”

variable.

References…

- http://plexussql.blogspot.ca/2010/04/looping-through-excel-files-and-sheets.html

- https://docs.microsoft.com/en-us/sql/integration-services/connection-manager/excel-connection-manager

- https://docs.microsoft.com/en-us/sql/integration-services/connection-manager/connect-to-an-excel-workbook

- https://docs.microsoft.com/en-us/sql/integration-services/extending-packages-scripting-task-examples/working-with-excel-files-with-the-script-task

- https://docs.microsoft.com/en-us/sql/integration-services/load-data-to-from-excel-with-ssis

- https://docs.microsoft.com/en-us/sql/integration-services/extending-packages-scripting-task-examples/working-with-excel-files-with-the-script-task

- http://www.madeiradata.com/load-data-excel-ssis-32-bit-vs-64-bit/