Objective

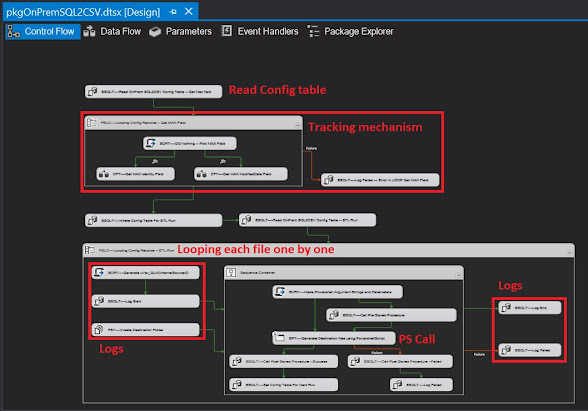

One of the general ETL practice that is needed in the cloud is to inject data from a CSV file to a SQL the file will be within Azure Blob Storage and the destination SQL is a Azure SQL Server. Once moving to the cloud the the ETL concept changes because of the large amount of data or large amount of ETL file loads and for that a automated/configured mechanism is required to load the hundreds and hundreds of files maybe an IoT data load might be a good example, an IoT service may have have more than one hundred devices, each device generates hundreds of files every day.

The solution is to create a config table in SQL that contained all the information about an ETL like

1 - Destination SQL table

2 - Mapping fields

3 - ...

4 - Source File Path

5 - Source File name and extension

6 - Source File Delimiter

7 - etc...

Requirements

The Azure Data Factory (ADF) will read the configuration table that contains the source and the destination information and finally process the ETL load from Azure Blob Storage (Container) to Azure SQL database, the most important part is that the config tale contains te mapping field in a JSON format in the config table

'

{"type": "TabularTranslator","mappings": [

{"source":{"name":"Prénom" ,"type":"String" ,"physicalType":"String"},"sink": { "name": "FirstName","type": "String","physicalType": "nvarchar" }},

{"source":{"name":"famille Nom" ,"type":"String" ,"physicalType":"String"},"sink": { "name": "LastName","type": "String","physicalType": "nvarchar" }},

{"source":{"name":"date de naissance" ,"type":"String" ,"physicalType":"String"},"sink": { "name": "DOB","type": "DateTime","physicalType": "date" }}

]}

'

Some of the config table are as mentioned

Source Fields

- [srcFolder] nvarchar(2000)

- [srcFileName] nvarchar(50)

- [srcFileExtension] nvarchar(50)

- [srcColumnDelimiter] nvarchar(10)

- etc...

Destination Fields

- [desTableSchema] nvarchar(20)

- [desTableName] nvarchar(200)

- [desMappingFields] nvarchar(2000)

- ....

- [desPreExecute_usp] nvarchar(50)

- [desPostExecute_usp_Success] nvarchar(50)

- ....

Please note…

- The DFT is called by a Master pipeline and has no custom internal logging system

- The three custom SQL stored procedures are not included in this solution

- Three custom SQL stored procedures can be used/set within the configuration table for each ETL , one for PreETL, second PostETLSuccess and Finally PostETLFailure

Do you want the code? Click here (From Google).